
Surveying the Surveys

By: Tracy Farone

Many of my pre-health professions and pre-graduate students must take a statistics course in order to graduate, whether they like it or not. Navigating the commonly quoted “lies, damn lies, and statistics” is an important part of becoming a competent scientist. Valid data is critical to all sciences and research. Natural sciences and social sciences rely on collecting data to make intelligent recommendations and choices in just about every aspect of life. However, how we select, collect, analyze, interpret and present that data is subject to all types of challenges and bias.

Surveys are a commonly used modality for gathering data. They are relatively cheap, quick and easy to perform compared to experimental studies and many observational studies. However, surveys have several limitations, including many types of what we call “bias” in science. Consider, for example, this quote from a scientific article on survey characteristics:

“Compared with traditional methods, conducting online survey research in a virtual community is easier, less expensive in most cases, and timelier in reaching populations. However, disadvantages exist, from sampling errors, low response rates, self-selection, to deception. Researchers should carefully consider limitations when writing their results. Also, some journals are hesitant to publish surveys due to poor quality.”(1)

Beekeeping has largely relied on surveys to provide data for our industry. The USDA NASS survey and the US Beekeeping Survey, previously known as the BIP survey, have provided the bulk of US national data on honey bees and their beekeepers. These surveys, while a great improvement over the data (or lack thereof) that existed just a few decades ago, have been plagued by low response rates, lack of funding, an enormous number of simultaneously occurring variables and the challenge of comparing the very different sideline, backyard and commercial beekeeping management groups that all exist in the same industry. Some states, state apiary programs, universities, colleges and local bee clubs may also conduct their own surveys, providing smaller pockets of regional or local data.

Just as it is for a science major, it is helpful for beekeepers to understand how surveys work and what they can and cannot provide to the recipient of the data. Let us explore some of the characteristics to be aware of when evaluating any survey data set, beekeeping related or otherwise.

Variables

In science, we try to reduce the number of variables in any study. Ideally, we like to study or test one variable change at a time. If you have been involved in beekeeping for more than a year, you understand that beekeepers deal with many simultaneous variables (weather, economics, disease threats, forage availability, etc.), and many of them are out of our control. Uncontrolled variables and multiple variables make it difficult to determine which factor or factors may be the cause of any outcome.

Definitions

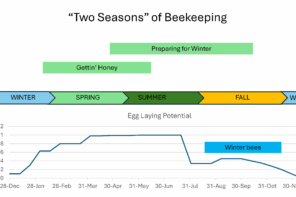

A common question I get from students while they are taking an exam is, “Dr. Farone, what do you mean by x?” I always mean the textbook definition, yet many students may produce their own versions within their own minds. So, communicating definitions can be a challenge, but shared understanding should never be assumed or overlooked. A consistent, clear definition of the data collected in survey questions is critical to ensure equal and comparable information. Words and phrases used to describe any data point collected in a survey must be well defined. For example, do Fall combinations count as Winter or annual “hive loss”? Simple terms or phrases like “mite treatment” or “Winter” may mean a wide variety of things to a wide variety of beekeepers.

Case description

If a certain condition is to be reported, clear criteria must define that situation as “a case.” This ensures standardization of reporting. If a case description is too vague, it will lead to a wide array of opinions and inconsistent, hard to interpret, or incorrect data. For example, fever, cough, fatigue, body aches = Influenza… or is that COVID-19… or wait (maybe E. coli, Lyme Disease, Cat-scratch disease, early HIV, pollen allergies… and hundreds of other things). See my point?

Various expertise

The ability to provide accurate answers to survey questions will vary with the survey respondents’ level of expertise (for example, the ability to accurately diagnose a disease) and their subjective opinions. We know that beekeeping has a wide variety of expertise, abilities and opinions within our ranks.

n

“n” is the number of subjects evaluated or surveyed. This is a pet peeve of mine. I have seen many a published study throwing out all types of conclusions, but if you take the time to read the “Materials and Methods” part of the study buried within the belly of the report, you may find they did the study on 20 subjects, representing a population of millions. Meh.

The validity or power of a study’s data relies heavily on “n” being a large enough number of subjects to provide reliable data for a given population. Larger n’s are almost always better. Most surveys like to get response rates of at least 20% of a population. Too much extrapolation can lead to the wrong application of sample data. For example, if you would like to study 5000 honey bee colonies and get 50 data responses, that is not very representative (1%). However, if 1000 respond (20%), we are getting into much more representative territory.
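To put rough numbers on the 5000-colony example, here is a minimal Python sketch. It computes the response rate and an approximate 95% margin of error for a reported percentage. The function names are hypothetical, and the margin-of-error formula assumes a simple random sample with a finite population correction, which real voluntary surveys rarely achieve, so treat the output as a best case rather than a guarantee.

```python
import math

def response_rate(responses: int, population: int) -> float:
    """Fraction of the population that responded."""
    return responses / population

def margin_of_error(n: int, population: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion.

    Assumes a simple random sample (an idealization). p = 0.5 is the
    most conservative choice; z = 1.96 corresponds to 95% confidence.
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    # Finite population correction: sampling a large share of a small
    # population reduces the uncertainty.
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc

for responses in (50, 1000):
    rate = response_rate(responses, 5000)
    moe = margin_of_error(responses, 5000)
    print(f"{responses} of 5000: response rate {rate:.0%}, "
          f"margin of error about +/-{moe:.1%}")
```

Under these idealized assumptions, 50 responses leave a margin of error well above ten percentage points, while 1000 responses bring it down to a few points. Note that a larger n only shrinks random error; it does nothing to correct the kinds of bias discussed in the next section.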

Bias

Bias in scientific studies comes in many types. Bias can also happen at various stages of a study, including pre-study preparation and planning, during the study itself, and after the study is complete, in the analysis process. The wording of questions should be carefully reviewed so as not to “lead” respondents’ answers or induce emotional responses. Reviewing a study to predict which types of bias it may be prone to is helpful in evaluating the weight of the study’s results and conclusions. Let us look at some specific types of bias that survey data is commonly subject to, with examples.

- Non-response bias

In a study with a low response rate, non-response bias occurs when the people who choose to respond to the survey are systematically different from those who do not, potentially skewing the results and making them unrepresentative of the overall population being studied. This can happen due to factors like unwillingness to participate, an incentive (or lack of incentive) to respond, or inability to respond to certain questions. An example of this would be a survey answered mostly by beekeepers with big losses, while those with little or no loss do not respond, or a study of the general population in which only sick people (and not healthy people) respond.

- Selection bias

When the sample of respondents is not representative of the target population, it leads to skewed data. An example of this would be surveying only backyard beekeepers with the intention of evaluating the entire industry, or drawing beekeeping data primarily from one state to represent a national conclusion.

- Voluntary response bias

This occurs when only individuals with strong opinions or motivations to participate respond, leading to an unrepresentative sample. An example of this would be taking a national political poll in Washington D.C. (D.C. has the highest proportion of registered Democrats in the U.S.).

- Incentive bias is a type of voluntary response bias that occurs when a survey respondent stands to gain financially from providing a specific response. It is never a bad investigative idea to follow the money…

- Social desirability bias

Respondents may provide answers they believe are socially acceptable rather than their true opinions, especially when sensitive topics are involved. This type of bias is common in interview type surveys or group surveys where identity may be known.

- Acquiescence bias

A tendency for respondents to agree with statements regardless of their actual beliefs, leading to biased results. Think of being a good sheep or not wanting to stir the pot.

- Extreme responding bias

When respondents consistently choose the most extreme answer options on a scale, distorting the data. This type of bias occurs especially for survey questions that elicit emotional responses from two opposing groups. (2)
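To see how non-response bias can distort a loss estimate even when every respondent answers honestly, here is a small simulation sketched in Python. Everything in it is invented for illustration: the loss distribution, the response probabilities and the function name `simulate_survey` are assumptions, not real survey data or any survey's actual method. The only idea being modeled is the one described above, that beekeepers with bigger losses are more likely to respond.

```python
import random

def simulate_survey(n_beekeepers: int = 10_000, seed: int = 42):
    """Compare the true average loss with the average among responders."""
    rng = random.Random(seed)
    true_losses = []
    reported_losses = []
    for _ in range(n_beekeepers):
        # Hypothetical fraction of colonies lost (population mean near 0.4).
        loss = rng.betavariate(2, 3)
        true_losses.append(loss)
        # Assumption: the chance of responding rises with the size of the
        # loss, from 5% (no loss) to 35% (total loss).
        p_respond = 0.05 + 0.30 * loss
        if rng.random() < p_respond:
            reported_losses.append(loss)
    true_mean = sum(true_losses) / len(true_losses)
    survey_mean = sum(reported_losses) / len(reported_losses)
    rate = len(reported_losses) / n_beekeepers
    return true_mean, survey_mean, rate

true_mean, survey_mean, rate = simulate_survey()
print(f"Response rate:            {rate:.1%}")
print(f"True average loss:        {true_mean:.1%}")
print(f"Survey-reported avg loss: {survey_mean:.1%}")
```

In this made-up scenario the survey average comes out higher than the true average, simply because of who chose to answer. Collecting more responses from the same self-selected group would not close that gap.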

PS on Project Apis m surveys and the ongoing USDA investigation

When I conceptualize a topic and then compose the actual article, this typically happens 3-4 months before publication in Bee Culture. Little did I know what was coming… As I finalize this article on surveys, the Project Apis m surveys have just hit the news. Results are concerning but still very early in evaluation, with surveys still open as I write this. It will be several months, maybe even years, before we understand the actual impact (true losses, fiscal impact, pollination losses, crop losses) of what may or may not be happening in the industry. I am pleased to hear that there is an excellent team of trained investigators already conducting extensive scientific testing into the possible and multiple causes of the surveys’ concerns. Certainly, beekeepers face more and more challenges and are often unrecognized and underappreciated for the agricultural necessity that they are. However, we must be careful to collect the facts of the situation and develop ways to fight true threats, backed with solid data that we can present and use to identify lasting solutions within the industry. Hopefully, we will all know more by the time you read this.

Resources and References

- Goodfellow LT. An Overview of Survey Research. Respir Care. 2023 Sep;68(9):1309-1313. doi: 10.4187/respcare.11041. Epub 2023 Apr 18. PMID: 37072162; PMCID: PMC10468179.

- Correspondence with Dr. Enzo Campagnolo DVM, MPH, retired CDC officer, PA Department of Health and PA State Veterinarian. All bias definitions. Feb 20, 2025.

- Pannucci CJ, Wilkins EG. Identifying and avoiding bias in research. Plast Reconstr Surg. 2010 Aug;126(2):619-625. doi: 10.1097/PRS.0b013e3181de24bc. PMID: 20679844; PMCID: PMC2917255.

- https://static1.squarespace.com/static/650342507631075013d25a2c/t/67b7a09cd206e90a8eb35a68/1740087456272/PAm+survey+summary+feb+20vf.pdf Accessed 3-5-25.

- https://www.facebook.com/story.php/?story_fbid=1047013274125778&id=100064513459334 Accessed 3-5-25.

- https://www.projectapism.org/ Accessed 3-5-25.

- https://www.qualtrics.com/en-gb/experience-management/research/survey-bias/ Accessed 3-5-25.